My 1997 Ph.D. thesis explored the use of agent-based collaboration to solve the intricate problem of cable harness routing. While this might seem highly technical, the concepts it introduced have broad relevance—especially today, when we think about how to build systems that help people connect meaningfully in our increasingly digital world.

At its core, a cable harness is a bundle of wires that connects various electronic components, enabling them to function together within a system. Imagine the wiring inside your car or a plane, where everything needs to be connected precisely to ensure proper operation. The challenge in designing a cable harness is not just connecting the points but doing so in a way that minimizes costs, avoids obstacles, and ensures reliability. This technical problem, while specific, serves as a powerful metaphor for how we might think about connecting people in complex social networks or digital platforms.

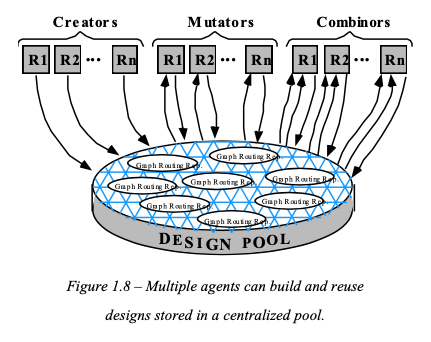

One of the key ideas in my thesis was breaking down this complex routing problem into more manageable subproblems. Different specialized agents—each with its own focus—would work on separate aspects of the design. Creator agents would generate initial designs, mutator agents would tweak and improve these designs, and combiner agents would merge the best parts of different solutions. This approach made it possible to tackle the enormous search space more efficiently, and I believe it has parallels in how we can connect people in meaningful ways.

Figure 1: This diagram illustrates the collaborative effort of multiple agents working together in a centralized design pool. Each agent contributes to finding the best solution by generating, modifying, or combining different design fragments. This mirrors how different models or algorithms might work together in a digital platform to optimize the connections between people.
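To make the creator, mutator, and combiner roles concrete, here is a minimal sketch of agents sharing one design pool. It is an illustration under stated assumptions, not the implementation from the thesis: the toy problem (choosing the order in which a single wire visits a handful of pins), the `PINS` coordinates, and every function name are hypothetical.

```python
import math
import random

# Hypothetical toy problem: a "design" is the order in which one wire visits the pins.
PINS = [(0, 0), (4, 1), (1, 3), (5, 5), (2, 6), (6, 2)]


def cost(design):
    """Total wire length when the pins are visited in the given order."""
    return sum(math.dist(PINS[a], PINS[b]) for a, b in zip(design, design[1:]))


def creator(rng):
    """Creator agent: propose a fresh random design."""
    design = list(range(len(PINS)))
    rng.shuffle(design)
    return design


def mutator(design, rng):
    """Mutator agent: tweak a design by swapping two pins in the visiting order."""
    i, j = rng.sample(range(len(design)), 2)
    child = design[:]
    child[i], child[j] = child[j], child[i]
    return child


def combiner(a, b, rng):
    """Combiner agent: keep a leading fragment of a, then fill in the rest in b's order."""
    cut = rng.randrange(1, len(a))
    head = a[:cut]
    return head + [pin for pin in b if pin not in head]


def run_pool(rounds=200, pool_size=10, seed=0):
    """Let the three agents contribute to a shared pool and return the cheapest design."""
    rng = random.Random(seed)
    pool = [creator(rng) for _ in range(pool_size)]
    for _ in range(rounds):
        pool.sort(key=cost)
        best = pool[: pool_size // 2]  # agents draw on the better half of the pool
        candidates = [
            creator(rng),
            mutator(rng.choice(best), rng),
            combiner(rng.choice(best), rng.choice(best), rng),
        ]
        # Keep only the cheapest designs, so the best one is never lost.
        pool = sorted(pool + candidates, key=cost)[:pool_size]
    return pool[0]


best_design = run_pool()
print(best_design, round(cost(best_design), 2))
```

Because the pool always keeps its cheapest members, the best design can only improve or stay the same from round to round. A real harness design would need a far richer representation (branching, obstacles, bundling constraints) than this single-wire ordering.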

In the digital age, connecting people efficiently and meaningfully is a complex task—much like solving a combinatorial problem with a vast number of possibilities. Social interactions, like cable harnesses, involve numerous connections that need to be optimized to ensure effective communication and collaboration. Just as the agents in my thesis shared design fragments to be reused and recombined, individuals in a social context share experiences, ideas, and emotions that are built upon and expanded by others.

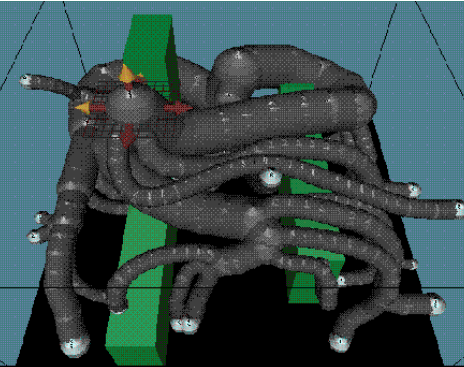

Figure 2: This image demonstrates how a cable harness (or a social connection) can be optimally routed around obstacles. The idea here is to find the best possible way to connect three points while navigating around barriers. In a social context, this could represent how people or ideas connect despite challenges or differences.
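The three-point routing idea in Figure 2 can be sketched on a small grid. This swaps in a simpler technique than the genetic algorithms used in the thesis: a breadth-first search from each terminal, followed by a brute-force choice of the junction cell that minimizes the combined length of the three branches. The grid layout, the `#` obstacle marker, unit step costs, and all function names are assumptions made for illustration.

```python
from collections import deque

# Hypothetical layout: '#' cells are obstacles, every other cell is free.
GRID = [
    "A...B",
    ".###.",
    ".....",
    "..C..",
]
TERMINALS = [(0, 0), (0, 4), (3, 2)]  # (row, col) positions of A, B, and C


def bfs_distances(grid, start):
    """Shortest step counts from start to every reachable free cell."""
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in dist:
                dist[(nr, nc)] = dist[(r, c)] + 1
                queue.append((nr, nc))
    return dist


def best_junction(grid, terminals):
    """Pick the cell whose summed distance to all terminals is smallest."""
    maps = [bfs_distances(grid, t) for t in terminals]
    reachable = set(maps[0]).intersection(*maps[1:])
    if not reachable:
        raise ValueError("the terminals cannot all be connected")
    junction = min(reachable, key=lambda cell: sum(m[cell] for m in maps))
    return junction, sum(m[junction] for m in maps)


junction, total_length = best_junction(GRID, TERMINALS)
print(junction, total_length)
```

For three terminals, a shortest connecting tree has at most one branch point, so scanning every candidate junction is enough on a grid this small. With more terminals the problem becomes a general Steiner tree problem, which is where heuristic search such as the agent-based approach becomes attractive.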

The genetic algorithms used in the thesis, where design fragments from the best solutions were selected and recombined, are akin to how social interactions evolve. Patterns of successful human connection, once identified, can be reused and adapted to different contexts, optimizing the ways people connect online. This process of refinement and recombination can be seen as a model for how digital platforms evolve to better serve their users.

Figure 3: This 3D model shows the complexity involved in connecting ten points or locations. Here, the human designer is actively making modifications, highlighting the importance of human input in complex systems. Just as in cable routing, where a designer adjusts the layout to optimize the connections, human intervention is crucial in fine-tuning social connections on digital platforms.

At a more abstract level, the routing problem can be likened to a knowledge tree, where nodes represent concepts or partial designs, and the connections represent relationships or transformations. The goal is to find the optimal paths through this tree—just as we might seek to connect people based on shared interests, goals, or experiences.

Similarly, the knowledge within a large language model (LLM) can be viewed as an enormous, implicit knowledge graph. In this picture, the model traverses the graph to generate responses, much as social platforms navigate users' data to foster connections. In this sense, an LLM is already a type of "combiner agent," one that flexibly recombines its learned knowledge in response to different contexts and goals, which in turn can enhance how people connect.

These ideas extend beyond just digital platforms. In business, academia, or even everyday social interactions, the concept of connecting ideas, people, and resources efficiently is increasingly vital. As in the cable harness routing problem, the best solutions often involve not just linear connections but a more holistic integration of multiple paths and nodes.

Reflecting on my work, I realize that the principles of decomposition and specialization that worked for engineering problems can apply broadly to societal issues. For example, community-building efforts can falter when they don't leverage the unique strengths of individuals or subgroups. Instead of applying a one-size-fits-all approach, we might do better by breaking down the problem, allowing different "agents" (whether people, organizations, or technologies) to specialize in what they do best, and then combining their efforts into a cohesive whole.

Flexibility and adaptability are also crucial. In the routing problem, designs often had to be adjusted to account for new constraints or changing environments, and the same holds in human systems. The most successful organizations and communities are those that can adjust to new information, learn from it, and evolve their structures accordingly. This evolutionary approach, guided by principles but responsive to change, seems to be key to both effective engineering and successful human endeavors.

Looking back, the core ideas presented in my thesis (problem decomposition, specialized agents, and collaborative optimization) are relevant not only to engineering but also to the design of systems that connect people. The principles that guided the routing of cables can guide us in building platforms that bring people together in more meaningful and effective ways. Ultimately, both cable harnesses and social connections are about creating systems where the whole is greater than the sum of its parts.